AI Governance for Leaders: 8 Principles That Protect Your Organization

A practical framework for executives to manage risk, improve AI literacy, and make confident, audit-ready decisions.

The Leadership Risk No One Sees Until It’s Too Late

For years, executives have been told that artificial intelligence would transform everything.

What no one told them is this:

AI doesn’t become dangerous when it’s powerful.

AI becomes dangerous when leadership assumes they’re in control — but they’re not.

One midsized health organization recently discovered this the hard way. A standard internal audit revealed six shadow AI tools in daily use, sensitive notes being pasted into public models, and zero visibility into who was using what, when, or why.

There was no data breach.

No lawsuit.

No scandal.

But something more expensive cracked: leadership credibility.

And that’s the part executives rarely see coming.

The truth is simple and deeply uncomfortable:

AI isn’t the risk. Uninformed leadership is.

To govern AI confidently — and to lead organizations through volatile technological cycles — executives need a new toolkit. Not a technical one. A leadership one. A governance one. A clarity-driven one.

These eight principles form the backbone of an AI Governance Framework that executive teams, boards, and service-based organizations can deploy immediately to reduce exposure, strengthen trust, and accelerate responsible innovation.

Let’s walk through them.

1. COMMAND CLARITY BEFORE COMPLEXITY

Great leaders don’t start with tools — they start with clarity.

Before anyone touches a model, they define the problem with precision.

A CFO at a regional financial services firm once asked her team to “explore AI for efficiency.” And what she received was exactly that:

Seven unrelated proofs of concept.

Six different tools.

Zero alignment.

Rising risk.

And 27 pages of output nobody could use.

The team hadn’t failed.

They’d simply followed an ambiguous brief.

And ambiguity in AI governance is never neutral. It is expensive.

Frustrated, the CFO reframed the request with sharp precision:

“Reduce end-of-month reporting time by 30% without touching any sensitive data.”

Within a week, the chaos disappeared.

The team delivered a single, governed, high-value pilot that cut reporting workloads by twenty-two hours — with no noise, no drift, and no unnecessary exposure.

This is the quiet truth behind most AI misfires:

Unclear asks create more than confusion.

They create shadow AI usage, leakage risks, compliance blind spots, duplicated effort, fragmented pilots, and ultimately — zero return on investment.

Clarity, on the other hand, does the opposite.

It produces stronger governance, cleaner decisions, tighter alignment, and outcomes that actually move the organization forward.

So before launching any AI initiative, executives must begin with the simplest and most strategic question:

“What decision, cost, or compliance burden are we trying to improve — and why?”

That question is governance as clarity.

Clarity as control.

And control as strategy.

2. ANCHOR AI IN THE WORLD YOU ACTUALLY OPERATE IN

Context protects budgets, reputations, and people.

A clinical psychology practice wanted to “use AI to improve the client experience.”

But once leadership grounded the conversation in reality — regulated clinical notes, privacy obligations, trauma-informed communication, inconsistent practitioner documentation — a completely different opportunity emerged:

Automating and standardizing clinical summaries, safety notes, and treatment plans.

Not flashy.

Not futuristic.

But operationally transformative — freeing clinicians from hours of documentation and lifting morale across the practice.

AI only delivers real value when it’s grounded in the world your people actually work in. That means understanding the rhythms of real workflows, the constraints your teams bump up against every day, the risks that quietly compound in the background, the regulatory expectations that can’t be ignored, and the humans whose decisions, judgment, and wellbeing sit at the center of it all.

When leaders operate above the waterline of reality, AI turns into hype — abstract, theoretical, detached from the work that truly matters. But when leaders ground AI decisions in the lived environment of their organization, something shifts. AI becomes leverage.

It becomes a multiplier instead of a distraction.

That’s why every AI proposal (no matter how small) should begin by mapping the real context it touches.

Who will be affected?

What data will be handled?

Which constraints shape what is truly possible?

Where does the risk surface expand?

AI governance doesn’t start with models, or features, or technical ambition.

It begins with context — because context is what keeps AI safe, useful, and aligned with the organization you’re actually leading.

3. DRAW THE RED LINES BEFORE YOU DRAW THE ROADMAP

Boundaries aren’t restrictive. They’re reputational insurance.

A mid-sized accounting practice recently learned this the hard way. Leadership launched an AI pilot to support tax preparation, scoped broadly as “assist analysts in producing draft returns.” It sounded harmless. Helpful, even. But no one paused to define what the model should never see — and what data needed ironclad protection.

Three months later, junior staff were unknowingly feeding the system client PII, pulling financial statements from unrelated folders, and drafting responses that blended sensitive information across cases. No one was careless. No one was malicious. It simply happened naturally — because there were no boundaries to prevent it.

This is the quiet truth most executives overlook: AI governance doesn’t start with enablement. It starts with prohibition. With drawing the red lines.

When boundaries aren’t explicit, pilots drift. Risk expands. Compliance weakens. Reputational damage quietly accumulates until it becomes a headline — or a courtroom problem. And by then, it’s too late to claim “we didn’t know.”

That’s why every organization needs a simple, powerful mechanism for setting the guardrails:

The Never / Sometimes / Always list.

What should AI never touch or process under any circumstances?

What can AI sometimes support, with the right oversight and safeguards?

And what should AI always log, document, or route through an auditable pathway?
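
For teams that want to make the list enforceable, the three questions above can be sketched as a small lookup. This is a minimal illustration, not a finished control: the data categories, the `POLICY` table, and the `check_use` function are hypothetical names invented for the example, and a real organization would substitute its own categories and approval process.

```python
from enum import Enum


class Rule(Enum):
    NEVER = "never"          # AI must not touch this data at all
    SOMETIMES = "sometimes"  # allowed only with a named human reviewer
    ALWAYS = "always"        # allowed, and every use is logged


# Hypothetical data categories; replace with your organization's own.
POLICY = {
    "client_pii": Rule.NEVER,
    "draft_tax_return": Rule.SOMETIMES,
    "public_guidance": Rule.ALWAYS,
}


def check_use(category: str, has_reviewer: bool = False) -> tuple[bool, str]:
    """Return (allowed, reason) for a proposed AI use of a data category."""
    # Anything not yet classified is treated as a red line by default.
    rule = POLICY.get(category, Rule.NEVER)
    if rule is Rule.NEVER:
        return False, "blocked: red line"
    if rule is Rule.SOMETIMES and not has_reviewer:
        return False, "blocked: needs a named reviewer"
    # Every permitted use still goes through the auditable pathway.
    return True, "allowed: log this use"


print(check_use("client_pii"))
print(check_use("draft_tax_return", has_reviewer=True))
```

The one design choice worth copying is the default: a category nobody has classified yet is treated as "never" until leadership decides otherwise.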

This isn’t bureaucracy. It’s leadership.

Your red lines don’t limit innovation — they protect your reputation long before your roadmap ever does.

4. TIME-LOCK DECISIONS TO ACCELERATE FORESIGHT

AI evolves in months, not years — governance must keep pace.

AI doesn’t evolve on traditional strategy timelines. It moves in months, sometimes weeks, far faster than the planning cycles most organizations are built on.

This is why one board’s request for a three-year AI strategy brought everything to a halt. Their CIO paused, then answered with a clarity that shifted the entire room:

“You don’t need a three-year plan.

You need a 90-day focus.”

That one sentence reframed the whole conversation.

Instead of chasing a distant, fast-expiring vision, the board anchored itself in a much more powerful question:

“What must be true before the next board meeting to reduce risk and increase readiness?”

Instantly, the fog lifted. Priorities sharpened.

Everything became visible, manageable, actionable.

This is the core of modern AI leadership:

Recognizing that long-range predictions will almost always expire before they’re implemented.

And when strategies age faster than organizations can execute, leaders lose momentum, visibility, and confidence.

Time-locked governance cycles reverse that.

A short horizon forces clarity.

A defined window builds accountability.

A predictable rhythm ensures visibility.

A constrained timeline demands agility.

And measurable checkpoints restore control.

This is what executive AI literacy looks like in practice — not knowing every technical detail, but knowing that the environment shifts faster than the planning systems designed to manage it.

So instead of annual or multi-year AI strategies, executives need rolling 90-day governance cycles, supported by live dashboards, risk registers, and consistent reporting pathways.

Short horizons don’t limit ambition.

They reduce uncertainty, speed up learning, and compound confidence over time.
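
One way to picture a rolling 90-day cycle is a risk register in which every entry carries its own review date. The sketch below is an assumption-laden illustration: the `RiskEntry` fields, the sample risks, owners, and dates are all hypothetical, and only the 90-day window comes from the principle above.

```python
from dataclasses import dataclass
from datetime import date, timedelta

CYCLE = timedelta(days=90)  # the rolling governance window


@dataclass
class RiskEntry:
    risk: str
    owner: str
    last_reviewed: date

    def next_review(self) -> date:
        """The date by which this risk must be looked at again."""
        return self.last_reviewed + CYCLE


def overdue(register: list[RiskEntry], today: date) -> list[RiskEntry]:
    """Entries whose 90-day review window has already closed."""
    return [entry for entry in register if entry.next_review() < today]


# Hypothetical register entries for illustration only.
register = [
    RiskEntry("shadow AI tools", "Risk & Compliance", date(2025, 1, 15)),
    RiskEntry("unlogged model outputs", "Technology", date(2025, 4, 1)),
]

for entry in overdue(register, today=date(2025, 6, 1)):
    print(f"Overdue: {entry.risk} (owner: {entry.owner})")
```

A board pack built on this kind of check answers the 90-day question directly: which risks have not been reviewed inside the current window, and who owns them.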

5. FORCE AI TO DEFEND ITS OWN THINKING

Most leaders don’t need more answers. They need better reasoning.

A CEO recently received two AI-generated market analyses that pointed in opposite directions. Instead of choosing the one that sounded more convincing, she shifted the entire dynamic by asking a different set of questions — the kinds of questions that force intelligence, human or artificial, to reveal its thinking.

“Show me your assumptions.”

“Where is your confidence overstated?”

“What might be wrong here?”

“And what would a competitor see differently?”

It was only after the models explained themselves — their logic, their blind spots, their weak signals — that the right path became obvious.

This is the real work of AI governance.

It isn’t about gathering good answers; it’s about challenging questionable ones.

Executives who accept AI outputs at face value aren’t saving time — they’re acquiring hidden risks. Bias remains undetected. Faulty assumptions slip through. Decisions weaken. Logic becomes untraceable. Compliance and regulatory expectations shift into dangerous territory.

Explainability isn’t a luxury in this environment. It is the backbone of responsible AI.

That’s why every AI system used inside an organization — no matter how simple the task — must be able to show its reasoning. What evidence was used? Where did the data come from? How confident is the model in its conclusion? What alternative interpretations exist? What contradictions might matter?

Governance is interrogation.

Interrogation builds trust.

And trust is what builds authority — the kind that withstands audits, scrutiny, and real-world pressure.
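
The interrogation questions above can also be turned into a simple completeness check that runs before an AI-generated analysis reaches a decision-maker. This is only a sketch: the field names in `REQUIRED` and the sample `analysis` record are hypothetical, chosen to mirror the questions in this section rather than any particular tool's output format.

```python
# Hypothetical field names mirroring the questions in this section.
REQUIRED = ("assumptions", "evidence", "data_sources", "confidence", "alternatives")


def missing_reasoning(record: dict) -> list[str]:
    """Return the required reasoning fields that are absent or empty."""
    # A confidence of 0.0 is a real answer, so only None, "" and [] count as empty.
    return [field for field in REQUIRED if record.get(field) in (None, "", [])]


# Illustrative record for an AI-generated market analysis.
analysis = {
    "assumptions": ["demand stays flat next quarter"],
    "evidence": ["internal sales summary"],
    "confidence": 0.7,
}

gaps = missing_reasoning(analysis)
if gaps:
    print("Send back for explanation. Missing:", ", ".join(gaps))
```

A check like this does not judge whether the reasoning is good. It only guarantees that the reasoning exists and can be challenged.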

6. PRESSURE-TEST THE FUTURE BEFORE IT HAPPENS

Every organization has blind spots that stay invisible right up until they become expensive.

AI simulations offer leaders something rare: a way to see those blind spots safely, before reality forces their hand.

A government agency recently decided to run a simple scenario through its internal AI systems:

“What happens if frontline staff use AI translation tools without approval?”

What surfaced was alarming. The simulation exposed potential privacy breaches, mistranslated safety instructions that could put lives at risk, vulnerabilities under Freedom of Information laws, and reputational fallout that would have been difficult and costly to repair.

No crisis had occurred. But the simulation illuminated exactly how one could — and that clarity reshaped the agency’s entire AI governance policy.

This is the power of simulation.

It turns unknowns into knowns. It gives leaders the ability to test consequences without paying the real-world price.

Because risk only reveals itself in two ways: through cheap simulations or through expensive reality.

Executives who use simulations wisely can explore operational failure patterns, ethical dilemmas, compliance vulnerabilities, reputational exposure, and customer-impact scenarios long before they’ve hardened into problems that demand public explanation.

Pressure-testing the future before it arrives is governance maturity in action, and it’s one of the most strategic advantages leaders can create in the age of AI.

7. HUNT FOR THE HIGHEST-LEVERAGE MOVE YOU’RE MISSING

Not every AI initiative matters — but the right one can reshape an entire organization.

A COO was convinced that their customer-facing AI tool would be the strategic win of the year. It had energy behind it. It felt innovative. It was easy to showcase. But during a leadership review, someone asked a single, clarifying question:

“What is the highest-leverage move we’re currently overlooking?”

The answer surfaced immediately, and it had nothing to do with customers.

It was compliance paperwork — repetitive, time-intensive, morale-draining tasks that were quietly consuming nearly half the team’s weekly capacity. Automating those workflows didn’t just help a little. It freed 40% of operational bandwidth almost overnight.

That one, unglamorous shift delivered more measurable value than any customer-facing initiative on the table.

This is why executives must separate excitement from leverage. Excitement feels good. Leverage changes reality.

And leverage is always the difference between:

shaving 10 hours a month,

or releasing 500 hours a quarter back into the system.

reducing operational frustration,

or unlocking renewed institutional efficiency.

improving communication at the margins,

or transforming the organization’s entire operating rhythm.

Executives who learn to hunt for leverage — not novelty — make better, faster, safer decisions in the age of AI.

So once a quarter, ask the question that cuts through noise:

“Where is AI returning the least — and where could it return the most?”

Because in the end, governance isn’t about doing everything.

It’s about choosing the highest-leverage move every single time.
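
The quarterly leverage question comes down to simple arithmetic once every initiative is expressed in the same unit. The sketch below ranks a portfolio by hours released per quarter. The initiative names and the `Initiative` class are hypothetical; the two figures reuse the contrast drawn earlier in this section (10 hours a month, which is 30 hours a quarter, against 500 hours a quarter).

```python
from dataclasses import dataclass


@dataclass
class Initiative:
    name: str
    hours_per_quarter: float  # staff hours released back to the team


def rank_by_leverage(initiatives: list[Initiative]) -> list[Initiative]:
    """Order initiatives from highest to lowest hours released per quarter."""
    return sorted(initiatives, key=lambda item: item.hours_per_quarter, reverse=True)


# Illustrative portfolio: 10 hours a month is 30 hours a quarter.
portfolio = [
    Initiative("customer-facing assistant", 10 * 3),
    Initiative("compliance paperwork automation", 500),
]

ranked = rank_by_leverage(portfolio)
print("Highest leverage:", ranked[0].name)
print("Lowest leverage:", ranked[-1].name)
```

Hours are only one possible measure. The same ranking works for cost avoided or risk reduced, provided every initiative is scored on the same scale.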

8. INVITE STRATEGIC DISSENT BEFORE REALITY DELIVERS IT

Governance fails fastest in rooms where everyone agrees.

A CIO once presented what looked like a flawless AI roadmap to the board — clean, comprehensive, and seemingly airtight. The room nodded along, satisfied with the plan.

Everyone agreed… except the Company Secretary.

She leaned forward and asked the one question that changed everything:

“Show me where this breaks.”

That single invitation to explore failure — not success — surfaced a licensing oversight that would have resulted in a costly compliance breach.

The roadmap didn’t need applause. It needed challenge.

This is the part of AI governance executives often underestimate: dissent is not obstruction.

Dissent is a safety mechanism.

It’s the pressure release valve that prevents organizations from drifting into blind spots, groupthink, and risk accumulation.

Because risk doesn’t always arrive with warning signs.

In most organizations, it accumulates quietly — then appears all at once.

The leaders who understand this build dissent directly into their processes. They make critique part of the culture. They reward the person who asks the uncomfortable question rather than the one who stays quiet to keep the meeting moving.

So in every discussion about AI — every proposal, pilot, roadmap, or policy — make one question non-negotiable:

“What is the strongest reason this approach could fail?”

It’s simple. It’s direct. And it protects more than policies or budgets.

It protects credibility. It protects trust. It protects the organization’s future.

The most expensive failures are born in rooms where everyone agrees.

The strongest governance cultures engineer dissent long before reality forces it upon them.

AI Leadership Is Governance Leadership

In a world moving at the speed of AI, executives don’t need more tools, dashboards, or hype cycles. They need:

clarity

context

boundaries

foresight

reasoning

simulation

leverage

dissent

These eight principles form the backbone of an AI Governance Framework that turns uncertainty into authority, and risk into strategic advantage.

AI will not slow down.

But with intentional governance, you will not fall behind.

For leaders who value clarity, control, and confidence, the future isn’t something to fear.

It’s something to govern.

TL;DR — Executive Summary

AI isn’t dangerous because it’s powerful.

It becomes dangerous when leaders scale AI without clarity, guardrails, or governance.

These 8 AI governance principles give executives a practical, audit-ready framework to reduce risk, increase AI literacy, and make confident, defensible decisions.

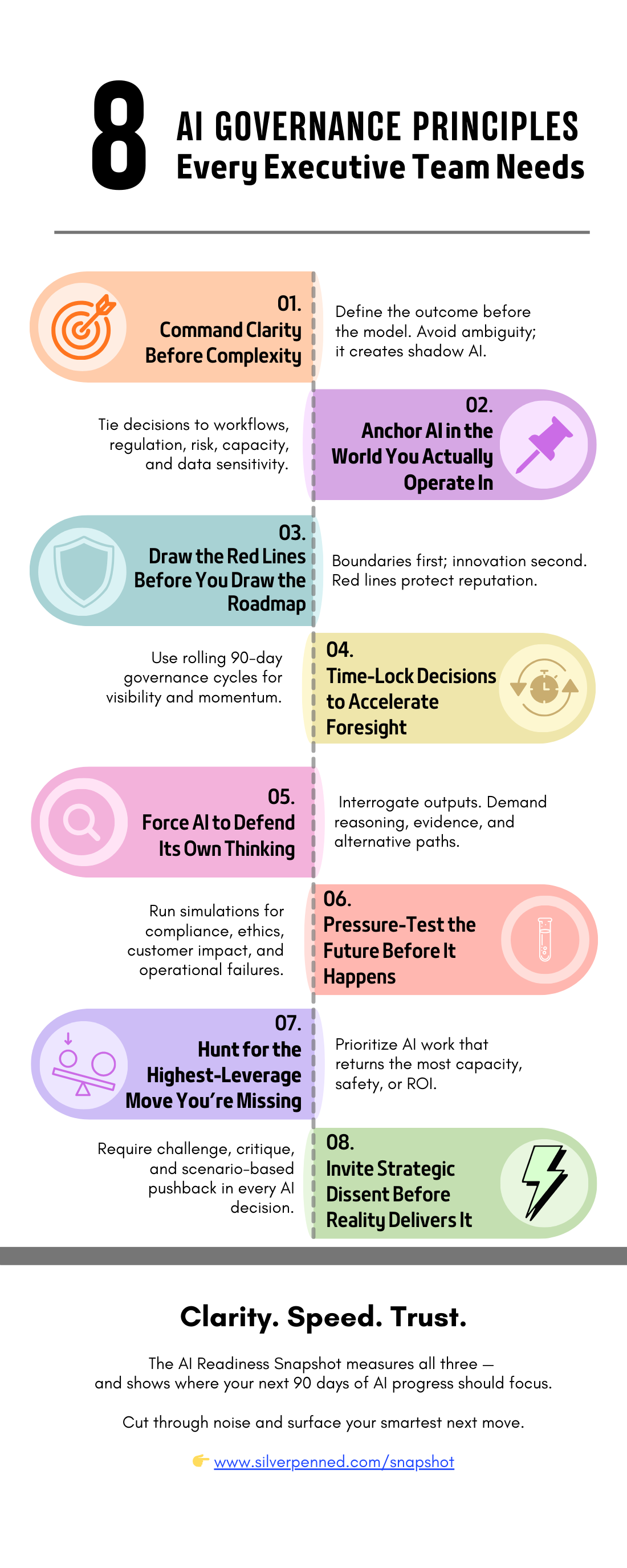

The 8 Principles Every Executive Team Must Master

1. Command Clarity Before Complexity

Define the outcome before selecting the tool or model. Ambiguity creates shadow AI, misalignment, and unnecessary risk.

2. Anchor AI in the World You Actually Operate In

Ground AI decisions in real workflows, regulations, constraints, and data sensitivity. Context prevents hype-driven mistakes.

3. Draw the Red Lines Before You Draw the Roadmap

Boundaries come first. Innovation comes second. Clear “never, sometimes, always” rules protect reputation and compliance.

4. Time-Lock Decisions to Accelerate Foresight

AI evolves faster than traditional planning cycles. Use rolling 90-day governance rhythms for momentum and visibility.

5. Force AI to Defend Its Own Thinking

Don’t accept outputs at face value. Demand reasoning, evidence, provenance, alternatives, and confidence scoring.

6. Pressure-Test the Future Before It Happens

Use simulations to uncover compliance risks, ethical failures, operational vulnerabilities, and customer impacts before reality does.

7. Hunt for the Highest-Leverage Move You’re Missing

Prioritize AI work that produces disproportionate gains in capacity, safety, or ROI. Not everything matters equally.

8. Invite Strategic Dissent Before Reality Delivers It

Dissent is a safety feature. Build challenge and critique into every AI decision to avoid blind spots and governance failures.

Who Owns What: Organizational Accountabilities

Board & Executive Team

Set the AI governance mandate, ratify risk thresholds, monitor AI literacy and exposure, and ensure safe, strategic deployment.

Risk & Compliance

Maintain the AI Risk Register, align practices with recognized standards and frameworks (ISO/IEC 42001, the OECD AI Principles), conduct audits, and run failure simulations.

Technology & Data Leaders

Implement safeguarded tools, enforce audit trails and access controls, and validate explainability and reliability.

Team Leaders & Operators

Apply approved use cases, follow boundaries, report deviations, and participate in ongoing AI literacy uplift.

In short:

These principles turn AI from a source of uncertainty into a strategic advantage, helping leaders govern with clarity, accelerate responsibly, and protect the trust their organizations depend on.

AI literacy is now a leadership competency — not a technical skill.

The AI Readiness Snapshot benchmarks your organization across clarity, capability, and culture.

See your score to strengthen leadership before scaling AI.
